Grab your glossaries, dictionaries, and a cup of coffee because we are about to get techy! SERVERware V4 was just released, and there are some significant advantages to this update.

Let’s talk about one of the most essential features for our partners. SERVERware 4.0 now offers NVMeTCP as a replacement for the previous iSCSI storage pool assembly. Thanks to NVMeTCP, storage performance is up to eight times better than in previous versions. It is now possible to run up to 30% more VPSes on the same resources.

We are getting ahead of ourselves. And you may be wondering, what is NVMe over TCP? 🤔

NVMe stands for Non-Volatile Memory Express. It is a protocol designed specifically for talking to solid-state drives, which store data in flash memory. NVMe drives are a massive upgrade from the spinning disk drives that our first computers had. They offer super-low latency and outstanding performance!

You will often find NVMe drives in enterprise servers, where they serve as boot drives and sometimes as cache drives. Picture your virtual server with a lot of storage capacity. Some of its drives are low-latency ones, reserved for applications that need really fast reads and writes, like gloCOM, for example. These NVMe drives act like a cache in the server: once operations are done, the data can be moved over to the other drives for longer-term storage.

So, where does the TCP come in?

The next step in the evolution of NVMe was to run it over TCP (Transmission Control Protocol), using TCP as the fabric transport, which lets you extend NVMe across the entire data center. NVMeTCP is arguably the most practical of the NVMe-over-fabrics options because it delivers high performance over standard Ethernet networks, with reduced deployment costs and design complexity.

Let’s break this down to understand how it will help you as a reseller!

NVMe/TCP lets a system use an SSD (flash-based storage) even if the drive is far away! The drive appears as local storage to that system, which makes NVMe/TCP a popular choice in hyper-scale data center environments. Since NVMe is carried over TCP, it is essentially using the network, so distance is much less of a limitation.
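To make "appears as local storage" a little more concrete, here is a minimal sketch of how a generic Linux host could attach a remote NVMe/TCP subsystem with the `nvme-cli` tool. SERVERware sets this up for you, so this is not its internal procedure. The IP address and subsystem name (NQN) are made-up placeholders, and 4420 is the standard NVMe over Fabrics port, used here as an assumed default. The function only builds the command and does not run it.

```python
from typing import List


def nvme_tcp_connect_argv(target_ip: str, subsystem_nqn: str, port: int = 4420) -> List[str]:
    """Build (but do not run) an nvme-cli command that attaches a remote
    NVMe/TCP subsystem. All values passed in are illustrative assumptions."""
    return [
        "nvme", "connect",
        "--transport=tcp",          # use TCP as the fabric transport
        f"--traddr={target_ip}",    # address of the storage target
        f"--trsvcid={port}",        # TCP port the target listens on
        f"--nqn={subsystem_nqn}",   # name of the remote NVMe subsystem
    ]


# Hypothetical target address and subsystem name, for illustration only.
argv = nvme_tcp_connect_argv("192.0.2.10", "nqn.2014-08.org.example:storage-pool")
print(" ".join(argv))
```

Once such a connection is established, the remote namespace shows up on the host as an ordinary NVMe block device, which is what "local to that system" means in practice.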

As mentioned earlier, we implemented NVMeTCP as a replacement for iSCSI in the storage pool assembly. In SERVERware, all mechanisms from iSCSI are available with NVMeTCP as well. Users can still use it with SSDs or other disks, meaning no additional hardware is needed. It can be enabled in the Mirror and Cluster editions of SERVERware, where it makes better use of hardware resources, lowers latency, and removes the RAN link bottleneck.

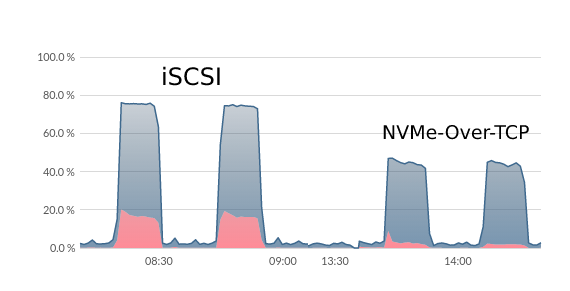

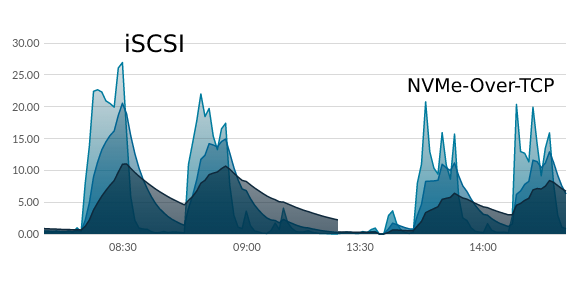

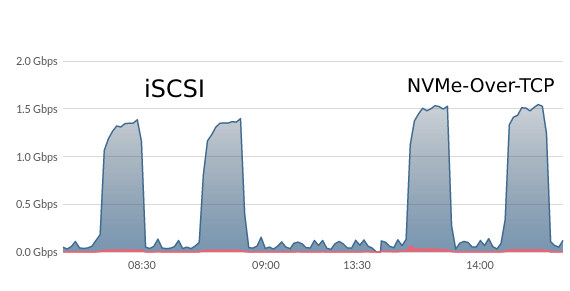

Our team ran a benchmark to show how much NVMeTCP improves SERVERware’s storage capabilities. Take a look at the comparison of iSCSI vs NVMeTCP, which focuses on the storage server’s performance.

For context, the benchmarking was conducted on our internal testing cluster. It consisted of a simultaneous load on the SAN and RAN links from 20 VPSes. This particular Cluster had two processing hosts, and each ran ten VPSes to generate load. The load was generated with the dd command, using its direct and sync options to force synchronous writes on the SAN link.
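For readers who want to try a similar load, here is a rough sketch of what such a dd invocation could look like. The exact command our team ran is not reproduced here. The output path, block size, and block count are assumptions, and `oflag=direct,sync` is one plausible reading of "direct sync option" in GNU dd. The function only builds the argument list and does not write anything.

```python
from typing import List


def dd_sync_write_argv(out_path: str, block_size: str = "1M", count: int = 1024) -> List[str]:
    """Build (but do not run) a GNU dd command that writes zeros with
    direct, synchronous I/O. Path, block size and count are assumptions."""
    return [
        "dd",
        "if=/dev/zero",            # source of data to write
        f"of={out_path}",          # file on the storage under test
        f"bs={block_size}",        # size of each write
        f"count={count}",          # number of blocks to write
        "oflag=direct,sync",       # bypass the page cache, write synchronously
    ]


# Hypothetical file path inside a VPS, for illustration only.
cmd = dd_sync_write_argv("/mnt/test/ddfile")
print(" ".join(cmd))
```

Running one such command in each of the 20 VPSes at the same time would approximate the simultaneous load described above.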

iSCSI Performance

The first benchmarking was performed on SERVERware 3.3.1, which uses the iSCSI protocol to replicate data on the RAN link.

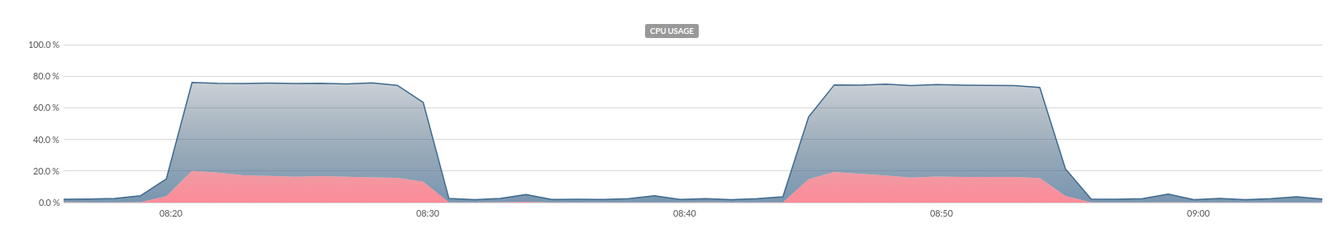

CPU USAGE

The CPU Usage (blue) was constant at about 76%, and the IO Wait (red) was at about 17%.

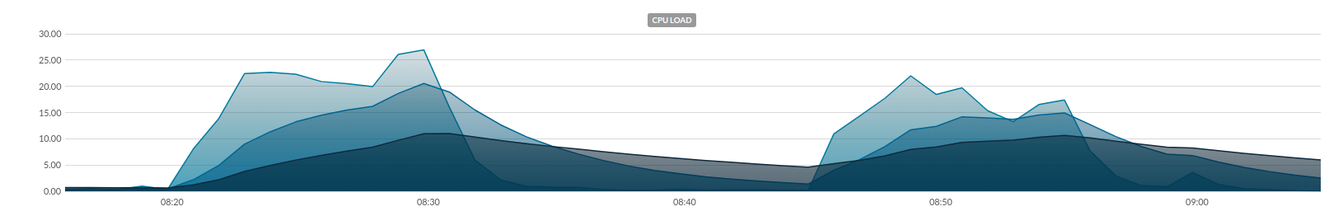

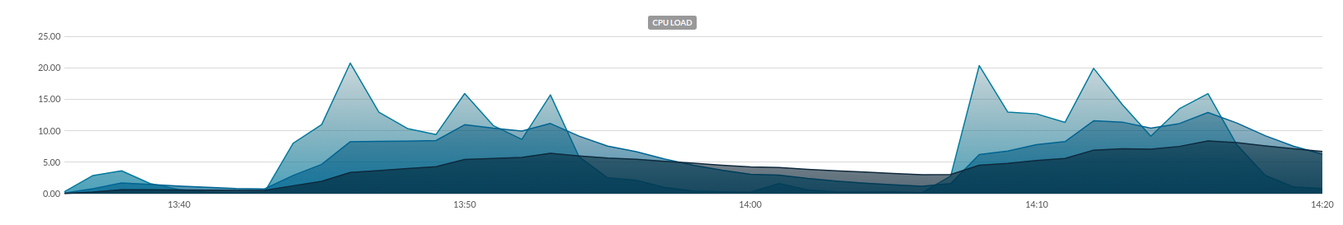

CPU LOAD

The CPU Load peaked at 26.92 over one minute (light blue) and 20.00 over 5 minutes (medium blue) of benchmarking. The storage server has 12 CPU cores, so this is a pretty severe load for the host.

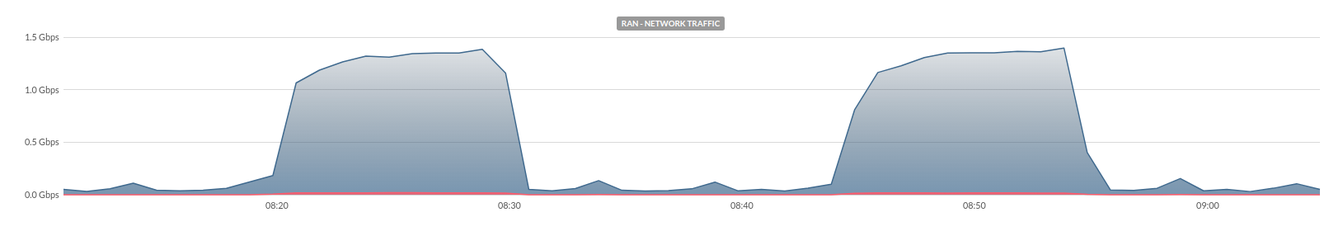

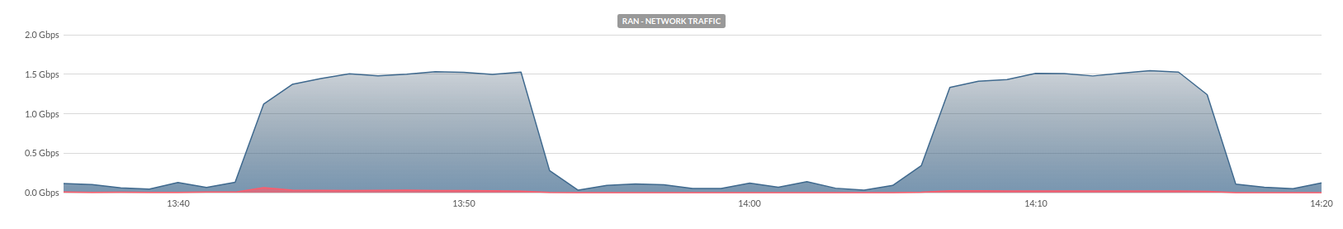

RAN BANDWIDTH

The RAN Bandwidth reached 1.38 Gbps during the benchmarking.

NVMe-Over-TCP Performance

We upgraded the Cluster to the 4.0 beta version of SERVERware, which uses the NVMeTCP protocol for replication on the RAN link.

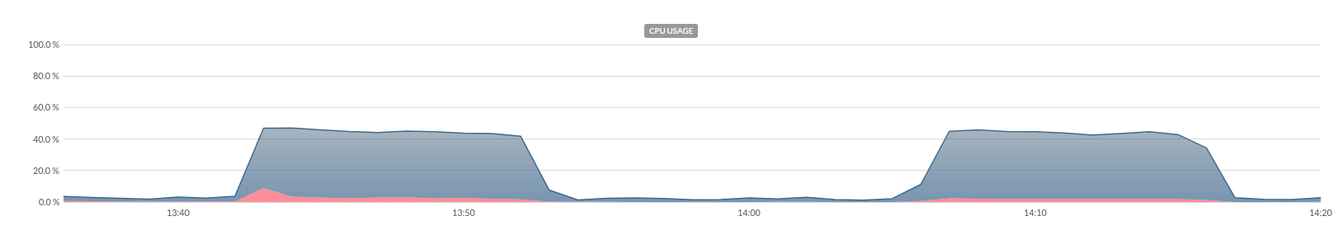

CPU USAGE

The CPU Usage (blue) was constant at about 45%, and the IO Wait (red) was at about 4%.

CPU LOAD

The CPU Load peaked at 20.74 over one minute (light blue) and 10.05 over 5 minutes (medium blue) of benchmarking. The storage server has 12 CPU cores, and this time the results are within a tolerable range.
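Load averages are easier to judge when divided by the number of cores. The short sketch below does that for the figures quoted in both benchmark runs. The rule of thumb that a sustained value around 1.0 per core or lower means the host is keeping up is a general assumption, not something measured in this benchmark.

```python
CORES = 12  # the storage server has 12 CPU cores

# Load averages as reported for each run.
LOAD_AVG = {
    "iSCSI (SERVERware 3.3.1)": {"1 min": 26.92, "5 min": 20.00},
    "NVMeTCP (SERVERware 4.0 beta)": {"1 min": 20.74, "5 min": 10.05},
}


def load_per_core(load: float, cores: int = CORES) -> float:
    """Normalize a load average by the number of CPU cores."""
    return load / cores


for run, windows in LOAD_AVG.items():
    for window, load in windows.items():
        print(f"{run:30s} {window}: {load_per_core(load):.2f} per core")
```

Seen this way, the five-minute load under NVMeTCP drops below one runnable task per core, while under iSCSI it stays well above it.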

RAN BANDWIDTH

The RAN Bandwidth reached 1.54 Gbps during the benchmarking.

Comparing the two protocols

Going by the figures above, NVMe-Over-TCP lowered CPU Usage from about 76% to about 45%, a drop of roughly 40%. It also cut IO wait from about 17% to about 4%, which is roughly four times less. Regarding the CPU Load, NVMe-Over-TCP generated about 50% less load over the five-minute period (10.05 vs 20.00) and about 23% less over one minute (20.74 vs 26.92). Lastly, the RAN Bandwidth increased by about 12% when using NVMe-Over-TCP, from 1.38 Gbps to 1.54 Gbps.
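If you would like to check the comparison yourself, the short sketch below recomputes the relative changes from the figures quoted in the two benchmark sections. It is plain arithmetic on those published numbers. The metric labels are only descriptive names chosen for this example.

```python
# Figures as quoted in the benchmark sections: (iSCSI, NVMe-Over-TCP).
RESULTS = {
    "CPU usage (%)": (76.0, 45.0),
    "IO wait (%)": (17.0, 4.0),
    "CPU load, 1 min": (26.92, 20.74),
    "CPU load, 5 min": (20.00, 10.05),
    "RAN bandwidth (Gbps)": (1.38, 1.54),
}


def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


def ratio(before: float, after: float) -> float:
    """How many times larger `before` is than `after`."""
    return before / after


for name, (iscsi, nvme) in RESULTS.items():
    print(f"{name:22s} {iscsi:>6} -> {nvme:>6}  "
          f"change {pct_change(iscsi, nvme):+6.1f}%  ratio {ratio(iscsi, nvme):.2f}x")
```

A negative change is an improvement for CPU usage, IO wait, and load, while a positive change is an improvement for bandwidth.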

To wrap up the benchmarking study, we see clear performance improvements in SERVERware 4.0. The overall improvement is significant, and we can confirm that the RAN link performs better on NVMeTCP. The results are expected to be even better on storage hosts with more CPU cores because NVMe-Over-TCP utilizes one IO queue per core while iSCSI has only one IO queue.
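The queue-per-core idea can be pictured with a toy model. The sketch below spreads a fixed number of in-flight requests over the available IO queues and reports how deep the busiest queue gets. It is a simplification: the round-robin assignment and the request count of 240 are assumptions made for illustration, and it says nothing about actual throughput.

```python
from collections import Counter
from typing import List


def queue_depths(requests: int, queues: int) -> List[int]:
    """Spread `requests` over `queues` round-robin and return each queue's depth."""
    counts = Counter(i % queues for i in range(requests))
    return [counts.get(q, 0) for q in range(queues)]


IN_FLIGHT = 240  # hypothetical number of outstanding requests

single_queue = queue_depths(IN_FLIGHT, 1)    # iSCSI-style: one IO queue
per_core_12 = queue_depths(IN_FLIGHT, 12)    # one queue per core, 12 cores
per_core_24 = queue_depths(IN_FLIGHT, 24)    # same load on a 24-core host

print("single queue, deepest:", max(single_queue))
print("12 queues, deepest:   ", max(per_core_12))
print("24 queues, deepest:   ", max(per_core_24))
```

In this model, every extra core shortens the line each request has to wait in, which is the intuition behind expecting better results on hosts with more cores.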

If you are looking to switch to a provider that offers NVMeTCP, get in touch with our sales team!

📞 +1 (647) 313 1515

📧 sales@bicomsystems.com

💻 www.bicomsystems.com/contact-us